Acoustic Emanations Tool

Mechanical PIN-entry keypads, such as those used in secure payment terminals, ATMs and door keypad locks, can be vulnerable to attacks that distinguish the sounds emanated by different keys. The click of each button can differ slightly from key to key, even though the clicks sound very similar to the human ear. Several research studies have demonstrated that typed data can be recovered from acoustic emanations, which has made them a known concern and a threat to user privacy; see the References section below.

In addition, keypad emanations are specifically tested in the PCI SSC PTS security-testing process required for the approval of secure payment terminals. The "Monitoring During PIN Entry" testing requirement verifies that there is no feasible way to determine any entered PIN digit by monitoring sound, electromagnetic emissions, power consumption or any other external characteristic available for monitoring.

These testing requirements are precisely what led me to develop the experimental Acoustic Emanations Tool, to support pre-compliance testing of secure payment terminals with mechanical PIN-entry keypads and other similar devices. The project was also a way to introduce myself to Deep Learning techniques.

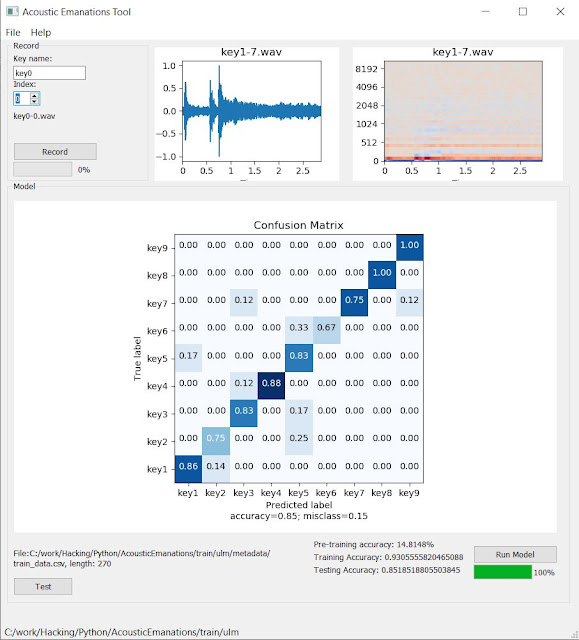

The tool has an interactive user interface built with PyQt5 and has been tailored to facilitate the acquisition of training data. The implemented classification algorithms are based on a Udacity Capstone Project that applies Deep Learning techniques to automatic environmental sound classification. The initial revision of the tool, v0.1, uses Mel-Frequency Cepstral Coefficients (MFCC) for feature extraction and a Multi-Layer Perceptron (MLP) neural network architecture for classification.
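As a rough illustration of the feature-extraction step, the sketch below computes MFCCs with plain NumPy (framing, Hamming window, power spectrum, mel filterbank, log, DCT) and averages them over time, giving one fixed-length vector per recording that an MLP can take as input. The function names and all parameters (40 coefficients, 512-point FFT, hop of 256 samples) are assumptions for illustration, not the tool's actual settings; in practice an audio library such as librosa is typically used for this.

```python
import numpy as np


def hz_to_mel(f):
    """HTK-style mel scale."""
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters spaced evenly on the mel scale, shape (n_filters, n_fft//2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):  # rising edge of the triangle
            fbank[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fbank[i - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def dct_ii(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    j = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * j + 1) / (2 * n))  # shape (n_out, n)
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale


def mfcc_vector(signal, sr, n_mfcc=40, n_filters=40, n_fft=512, hop=256):
    """Time-averaged MFCC vector (length n_mfcc) for a mono signal; needs n_mfcc <= n_filters."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < n_fft:  # pad very short clips to at least one frame
        signal = np.pad(signal, (0, n_fft - len(signal)))
    n_frames = 1 + (len(signal) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(n_fft)
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    mel_energy = power @ mel_filterbank(n_filters, n_fft, sr).T
    log_mel = np.log(mel_energy + 1e-10)  # small offset avoids log(0)
    coeffs = dct_ii(log_mel, n_mfcc)  # shape (n_frames, n_mfcc)
    return coeffs.mean(axis=0)  # summarize each coefficient over time
```

Averaging over time discards the temporal shape of the click, but it yields a compact, fixed-size input regardless of clip length, which suits a simple MLP.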

The tool facilitates the acquisition of key-press sounds for training the neural network and presents the results as a confusion matrix, which helps to determine whether the keypad is vulnerable to eavesdropping and which specific keys are most easily identifiable.

Tool Installation

The tool is a Python 3.7 application, so if you have a Python environment you can clone the project from https://github.com/EA4FRB/AcousticEmanations.git and run the script AcousticEmanations.py, available in the src folder, directly. You will need to install the dependencies listed in the requirements.txt file, if they are not already installed.
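Before launching the script, a quick pre-flight check can save a confusing import error. The sketch below only checks the Python version and the two packages named in this post (PyQt5 and TensorFlow); the authoritative dependency list is requirements.txt, and the helper name is hypothetical.

```python
import importlib.util
import sys

# Only the packages mentioned in this post; requirements.txt lists the full set.
REQUIRED_MODULES = ["PyQt5", "tensorflow"]


def missing_modules(modules):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    if sys.version_info[:2] != (3, 7):
        print(f"Note: the tool targets Python 3.7; this is {sys.version.split()[0]}")
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
        print("Try: pip install -r requirements.txt")
    else:
        print("Core dependencies found.")
```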

An alternative for Windows is the installer available at https://github.com/EA4FRB/AcousticEmanations/releases. Note that the resulting installation uses a large amount of disk space (around 1.6 GB), mainly due to the TensorFlow engine. If you already have Python and TensorFlow installed, you may prefer to launch the tool from Python directly.

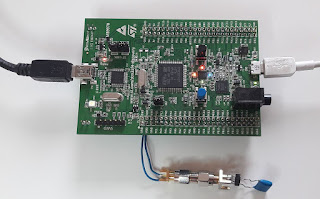

To demonstrate the operation of the tool, I will use a device that is not intended for sensitive data entry, but that is representative of the function being tested: the SARK-110-ULM antenna analyzer, which has nine mechanical buttons for user input. The buttons are implemented with metal dome switch technology, which provides tactile and audible feedback, or "snap", when pressed.

The training data must be captured in a quiet environment using a good microphone. Make sure that the sound settings of your computer are properly configured, and run some tests in advance, e.g. with the Audacity tool, to verify the setup.
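Audacity is a good way to inspect the setup visually; if you prefer a scripted check, the following sketch (standard library only, and not part of the tool) reports the peak and RMS level of a test recording and counts clipped samples. It assumes 16-bit PCM WAV files; the function names are hypothetical.

```python
import array
import math
import sys
import wave


def to_dbfs(value):
    """Convert a 16-bit sample magnitude to dB relative to full scale."""
    return 20 * math.log10(value / 32768) if value > 0 else float("-inf")


def level_report(path):
    """Peak/RMS level (dBFS) and clipped-sample count of a 16-bit PCM WAV file."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        samples = array.array("h", wf.readframes(wf.getnframes()))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    if len(samples) == 0:
        raise ValueError("empty recording")
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    clipped = sum(1 for s in samples if s >= 32767 or s <= -32768)
    return {"peak_dbfs": to_dbfs(peak), "rms_dbfs": to_dbfs(rms), "clipped": clipped}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python check_level.py test_click.wav (file name is illustrative)
    print(level_report(sys.argv[1]))
```

Running it once on a recording of silence and once on a single key press gives a rough idea of the margin between the room's noise floor and the click; a usable capture should show no clipped samples.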

The first step in capturing training data for a specific device is to select a folder for storing the data. Select the menu <File> <Folder Select> and create or select the appropriate folder in the file dialog. The tool will use this folder as the parent for the following information:

- .\wav: the audio files used for training

- .\metadata: train_data.csv metadata file with the information of the audio files

- .\saved_models: features.csv file with the extracted features, and weights.best.basic_mlp.hdf5 saved model file
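To illustrate how the folder layout and the metadata file relate, here is a hypothetical sketch that scans the wav subfolder and writes metadata/train_data.csv with one row per recording. The "<key name>_<index>.wav" naming pattern and the file_name/label columns are assumptions; the CSV written by the tool may use a different format.

```python
import csv
from pathlib import Path


def build_metadata(root):
    """Scan <root>/wav and write <root>/metadata/train_data.csv (assumed columns: file_name, label)."""
    root = Path(root)
    (root / "metadata").mkdir(parents=True, exist_ok=True)
    (root / "saved_models").mkdir(parents=True, exist_ok=True)
    rows = []
    for wav in sorted((root / "wav").glob("*.wav")):
        # Assumed naming "<key name>_<index>.wav": the label is everything before the last "_".
        label, sep, index = wav.stem.rpartition("_")
        if sep and index.isdigit():
            rows.append({"file_name": wav.name, "label": label})
    out = root / "metadata" / "train_data.csv"
    with out.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["file_name", "label"])
        writer.writeheader()
        writer.writerows(rows)
    return rows
```

Files that do not match the assumed pattern are skipped rather than guessed at, so a stray file in the wav folder does not end up with a wrong label.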

Now we can start recording the audio samples for each key press. We will have to capture multiple training samples for each key.

For this purpose, use the "Record" group box. For each key, first enter a name to identify the key, e.g. 'key1'. The "Index" is reset to zero every time a new key name is entered and is increased automatically after every recording. The index can also be changed manually, e.g. if you notice that the previous recording was not correct. The wav file name, which combines the key name and the index, is shown in this group box.
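The key-name and index behaviour of the "Record" group box can be modelled as a small state machine. This is a hypothetical sketch of the behaviour described above, not code from the tool; in particular, the "<key name>_<index>.wav" pattern is an assumed format.

```python
class RecordingCounter:
    """Hypothetical model of the "Record" group box: a key name plus an auto-incrementing index."""

    def __init__(self):
        self.key_name = ""
        self.index = 0

    def set_key(self, name):
        # Entering a different key name resets the index to zero.
        if name != self.key_name:
            self.key_name = name
            self.index = 0

    def next_file_name(self):
        # Assumed "<key>_<index>.wav" pattern; the tool's exact format may differ.
        return f"{self.key_name}_{self.index}.wav"

    def recorded(self):
        # After a successful recording, advance to the next index.
        self.index += 1

    def redo_last(self):
        # Step back one index so the next recording overwrites the bad one.
        self.index = max(0, self.index - 1)
```

For example, after `set_key("key1")` and two calls to `recorded()`, `next_file_name()` gives "key1_2.wav"; calling `redo_last()` brings it back to "key1_1.wav".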

To record a single key press, click the "Record" button. The recording lasts three seconds and its progress is shown in the progress bar below; you must press the key during this period. If the recording fails for whatever reason, decrease the index value and repeat the recording.

When the recording of a key press completes, the file is saved and the tool shows a chart with the time-domain capture of the key press and a chart with its MFCC. The software automatically scales the audio and trims the leading and trailing silence to focus on the key click. Repeat this process several times for each key, and then for all the keys. In this particular example, I captured 30 recordings for each key (a total of 270 recordings).
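The exact scaling and trimming method used by the tool isn't described. A common approach, sketched here with NumPy as an assumption, is to peak-normalize the clip and then drop leading and trailing frames whose RMS level falls below a threshold relative to the peak, similar in spirit to the `top_db` parameter of `librosa.effects.trim`. The function name and defaults are hypothetical.

```python
import numpy as np


def normalize_and_trim(signal, sr, threshold_db=-40.0, frame_ms=10.0):
    """Peak-normalize a clip, then drop leading/trailing frames quieter than threshold_db.

    threshold_db is relative to the normalized peak (0 dB); frames do not overlap.
    """
    x = np.asarray(signal, dtype=float)
    peak = np.max(np.abs(x)) if x.size else 0.0
    if peak == 0:
        return x  # empty or silent clip: nothing to scale or trim
    x = x / peak
    frame = max(1, int(sr * frame_ms / 1000))
    n_frames = int(np.ceil(len(x) / frame))
    padded = np.pad(x, (0, n_frames * frame - len(x)))
    rms = np.sqrt(np.mean(padded.reshape(n_frames, frame) ** 2, axis=1))
    loud = np.flatnonzero(20 * np.log10(rms + 1e-12) > threshold_db)
    if loud.size == 0:
        return x  # nothing above threshold: keep the whole clip
    start = loud[0] * frame
    end = min(len(x), (loud[-1] + 1) * frame)
    return x[start:end]
```

Trimming to the loud region keeps the click and discards the silence around it, so the features describe the key rather than the room. The -40 dB threshold and 10 ms frames are arbitrary starting points.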

Note that you can examine a recorded file at any time from the menu <File> <Load wave file>.

After completing the recording process, you can run the model and let the "magic" happen. Press the "Run Model" button and after a few seconds you will see the results. Note that the results can change slightly each time the model is run, because a random subset of the recordings is used for testing.
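One reason results vary between runs is that a different random subset of recordings can end up in the test set each time (neural network training typically adds further randomness through weight initialization). The sketch below shows a per-key (stratified) random split with NumPy; whether the tool stratifies, and which test fraction it uses, are assumptions here, and the function name is hypothetical.

```python
import numpy as np


def stratified_split(labels, test_fraction=0.2, seed=None):
    """Return sorted (train_idx, test_idx), drawing test_fraction of each key at random."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, test = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = max(1, int(round(test_fraction * len(idx))))
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return np.sort(np.array(train)), np.sort(np.array(test))
```

With 30 recordings per key and a 20% test fraction, each key contributes 6 test recordings (54 of the 270 in total). Passing a fixed `seed` makes the split reproducible, though not necessarily the training itself.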

The most important output of the model is the confusion matrix, expressed in this case in normalized form. The diagonal elements represent the points for which the predicted label equals the true label, while the off-diagonal elements are those mislabeled by the classifier. The higher the diagonal values of the confusion matrix, the more correct predictions the classifier makes, which in turn means that the keypad sounds are not uniform and the keypad is vulnerable to eavesdropping.
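To make the normalized confusion matrix concrete, the sketch below counts (true key, predicted key) pairs and divides each row by the number of recordings of that key, so the diagonal gives the per-key accuracy. Row normalization is an assumption about the tool's "normalized form", although it is the common convention (scikit-learn's `confusion_matrix` with `normalize="true"` does the same); the labels and predictions in the example are made up.

```python
import numpy as np


def normalized_confusion(y_true, y_pred, labels):
    """Row-normalized confusion matrix: row = true key, column = predicted key."""
    pos = {lab: i for i, lab in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)))
    for t, p in zip(y_true, y_pred):
        cm[pos[t], pos[p]] += 1
    row_sums = cm.sum(axis=1, keepdims=True)
    # Divide each row by its total; rows with no recordings stay at zero.
    return np.divide(cm, row_sums, out=np.zeros_like(cm), where=row_sums > 0)


# Made-up example: per-key accuracy (diagonal) is key1 0.75, key2 1.0, key3 0.5.
labels = ["key1", "key2", "key3"]
y_true = ["key1"] * 4 + ["key2"] * 4 + ["key3"] * 2
y_pred = ["key1", "key1", "key1", "key2"] + ["key2"] * 4 + ["key1", "key3"]
cm = normalized_confusion(y_true, y_pred, labels)
per_key_accuracy = dict(zip(labels, np.diag(cm)))
```

With the same number of recordings per key, the mean of the diagonal equals the overall accuracy, which is one way to read an "average accuracy" figure.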

The results for this particular device show that the model can predict key presses with an average accuracy of 80%, and that keys 8 and 9 can be predicted with 100% accuracy. These results would disqualify this keypad for use as a secure keypad.

Another function of the tool is testing a single recording against the model. Press the "Test" button and select the wav file in the file dialog; the tool then displays the message box shown below. In this example, I used one of the wav files from the training set, which is classified as key1, as expected.
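Behind a test like this, the usual pattern is to extract the same features used for training from the new file, ask the trained model for class probabilities, and report the most probable key. The sketch below assumes the features are already computed and that the model exposes a predict-style callable returning softmax probabilities of shape (1, n_classes), as a Keras model's `predict` does; the function and parameter names are hypothetical.

```python
import numpy as np


def classify(features, predict_proba, labels):
    """Return (label, confidence) for one feature vector.

    predict_proba maps a (1, n_features) array to class probabilities of shape
    (1, n_classes), in the same order as labels.
    """
    batch = np.asarray(features, dtype=float)[None, :]  # add a batch dimension
    probs = np.asarray(predict_proba(batch))[0]
    best = int(np.argmax(probs))
    return labels[best], float(probs[best])
```

Reporting the probability alongside the label helps spot borderline cases, where a recording sits between two similar-sounding keys.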

Conclusion

This post presented the initial revision of the experimental, open-source Acoustic Emanations Tool. It served as my introduction to Deep Learning techniques, and I expect it will be useful for validating keypads used in secure applications.

References

- Udacity Capstone Project, https://github.com/mikesmales/Udacity-ML-Capstone

- Dictionary Attacks Using Keyboard Acoustic Emanations, https://www.eng.tau.ac.il/~yash/p245-berger.pdf

- Keyboard Acoustic Emanations, https://rakesh.agrawal-family.com/papers/ssp04kba.pdf

- A Closer Look at Keyboard Acoustic Emanations: Random Passwords, Typing Styles and Decoding Techniques, https://eprint.iacr.org/2010/605.pdf
